rationalism

30 Oct 2021 - 27 Jul 2025

- [a note on terminology: "rationality" vs "rationalism"]

- Rationalism is a movement of nerdy types (in both the best and worst senses), centered around the LessWrong website. It should be distinguished from small-r rationalism, which is just a philosophical position. Rationalism goes beyond the small-r version in that it is also a self-help movement that tries to promote what it considers better ways of thinking and being. Also, LessWrong rationalism is closer to empiricism than to the philosophical rationalism of, say, Spinoza.

- It has a very particular theory of what that means, comprising a theory of knowledge (representational objectivism) and of action (optimizing aka winning). Both of these theories seem extremely weak to me, in that they don't adequately describe the natural phenomena they are supposed to be about (human intelligence) and they don't serve as an adequate guide for building artificial versions of the same.

- Nevertheless they manage to do a lot of interesting thinking based on this inadequate framework, and they attract smart and interestingly weird people, so I find myself paying them attention despite my disdain for their beliefs. A lot of this text is about me trying to work out this contradiction.

- The other component of Rationalism is a belief that superintelligent AI is just around the corner and poses a grave ("existential") threat to humanity, and that it is their duty to try to prevent this.

- Rationalists have founded MIRI (the Machine Intelligence Research Institute) to deal with this problem, and CFAR (Center for Applied Rationality) to promulgate rationalist self-improvement techniques. They are also tightly connected to the Effective Altruism movement. They've attracted funding from shady Silicon Valley billionaires and allies from within the respectable parts of academia. And they constitute a significant subculture within the world of technology and science, which makes them important. They are starting to penetrate the mainstream, as evidenced by this New Yorker article about some drama on the most popular rationalist blog, SlateStarCodex.

- [update 2/13/2021: the mentioned New York Times article finally dropped and it seems pretty fair.]

SlateStarCodex was a window into the Silicon Valley psyche. There are good reasons to try and understand that psyche, because the decisions made by tech companies and the people who run them eventually affect millions.

- Rationalists occasionally refer to their movement as a "cult" in a half-ironic way. It has a lot of the aspects of a cult: an odd belief system, charismatic founders, apocalyptic prophecies, standard texts, and a certain closed-world aspect that both draws people in and repels outsiders. But it's a cult for mathematicians, and hence its belief system is a lot stronger and more appealing than, say, that of Scientology.

- The NYT article has a quote by Scott Aaronson (a Rationalist-adjacent mathematician):

They are basically just hippies who talk a lot more about Bayes’ theorem than the original hippies.

- Now, this is quite true in that Rationalists constitute a subculture, have established a network of group houses, have a lot of promiscuous sex (aka "polyamory"), and are into psychedelics. On the other hand, in Meditations on Meditations on Moloch I find that they've inverted some key hippie attitudes, for better or worse. They embrace what the hippies rejected and want to build a world on different principles.

Various Critiques

- My own past writings

- David Chapman's (aka Meaningness) efforts to critique rationalism and replace it with a more powerful and realistic metarationality. My own views were deeply influenced by his work, which includes a much more systematic and thorough analysis of the problems of rationalism than anything here.

- Another very different critique may be found in Elizabeth Sandifer's Neoreaction: A Basilisk, which draws out the connections between rationalism, neoreactionary politics, and quasi-Lovecraftian hyperstition.

- SneerClub is a Reddit group for taking potshots at the Rationalist community; often unfair but occasionally insightful.

- Topher Hallquist finds a surprisingly anti-science bent in Rationalist core writings.

- Response by SlateStarCodex here

Some admirable things about Rationalists

- They are super-smart, of course. They seem to attract mathematicians who are too weird for academia, and we sure need more people like that.

- Their ideas tend to be simple, precise, and stated with extreme clarity.

- They want to save the world and otherwise do good.

- They are serious and committed about putting their ideas into practice.

- They are very reflective about their own thinking, and seek to continually improve it.

My major gripes

- Objectivist model of mental representation (representational objectivism)

- Assuming that the overarching goal of life is "winning"

- A shallow view of embodiment

- Overly mathematical (confusing map with territory)

- Occasional extreme arrogance

- A sort of impenetrable closed-world style of self-justification.

- Retro taste in ideas and sometimes esthetics.

- Sloterdijk made a good crack (in You Must Change Your Life) about small-r rationalists; he called them "the Amish of postmodernism". Of course if that metaphor holds, then I should leave them alone to their quaint and deeply held beliefs, which might end up being superior to the mainstream for long-term survivability.

- Connections (socially and intellectually) to unpleasant political movements like libertarianism, objectivism, and neoreaction, fueled by an antipolitics stance that is ultimately shallow.

- Taking as axiomatic things that are extremely questionable at best (orthogonality thesis, Bayesianism as a theory of mind).

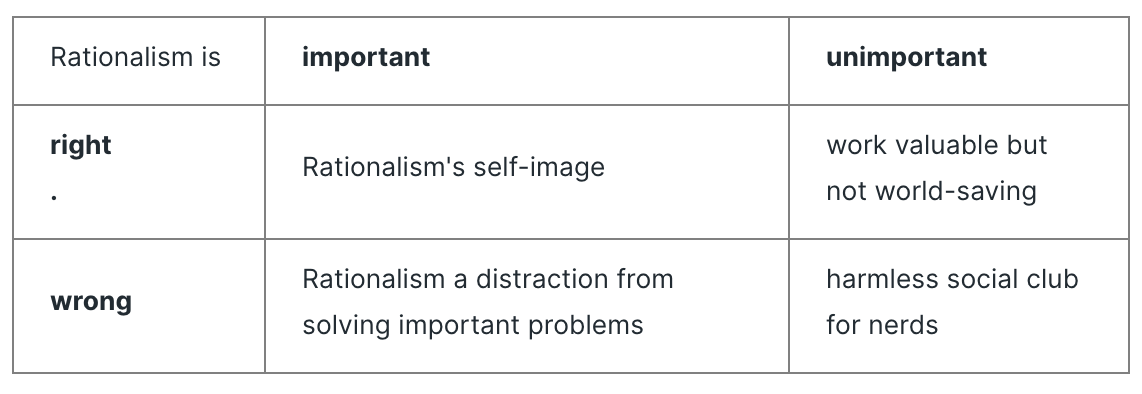

A Rationalist 2x2

- OK, this was really just an experiment to see if I could make a 2x2 table in Roam, and yes, I could, and it was pretty easy!

- The upper-left and bottom-right quadrants are pretty self-explanatory.

- The top-right is a bit contradictory because the claim of importance is central to Rationalism. They believe they are literally saving the world from likely destruction by superintelligent AIs, and what could be more important than that? But they could be wrong about their importance while still producing intellectual value, so this represents that possibility.

- The bottom-left represents the possibility that Rationalism is not only wrong but harmful, in that it distracts smart people from working on real problems, and to the extent it becomes a dominant ideology in the tech world it becomes that much more harmful. Also, to the extent that Rationalism is an ally of bad political ideas (considerable), it's not just a harmless nerd social club.