Acid Horizon/David Bentley Hart, We Are Not Software

08 Mar 2023 - 03 Jul 2025

- A podcast episode with David Bentley Hart, via WS Discord.

tl;dr

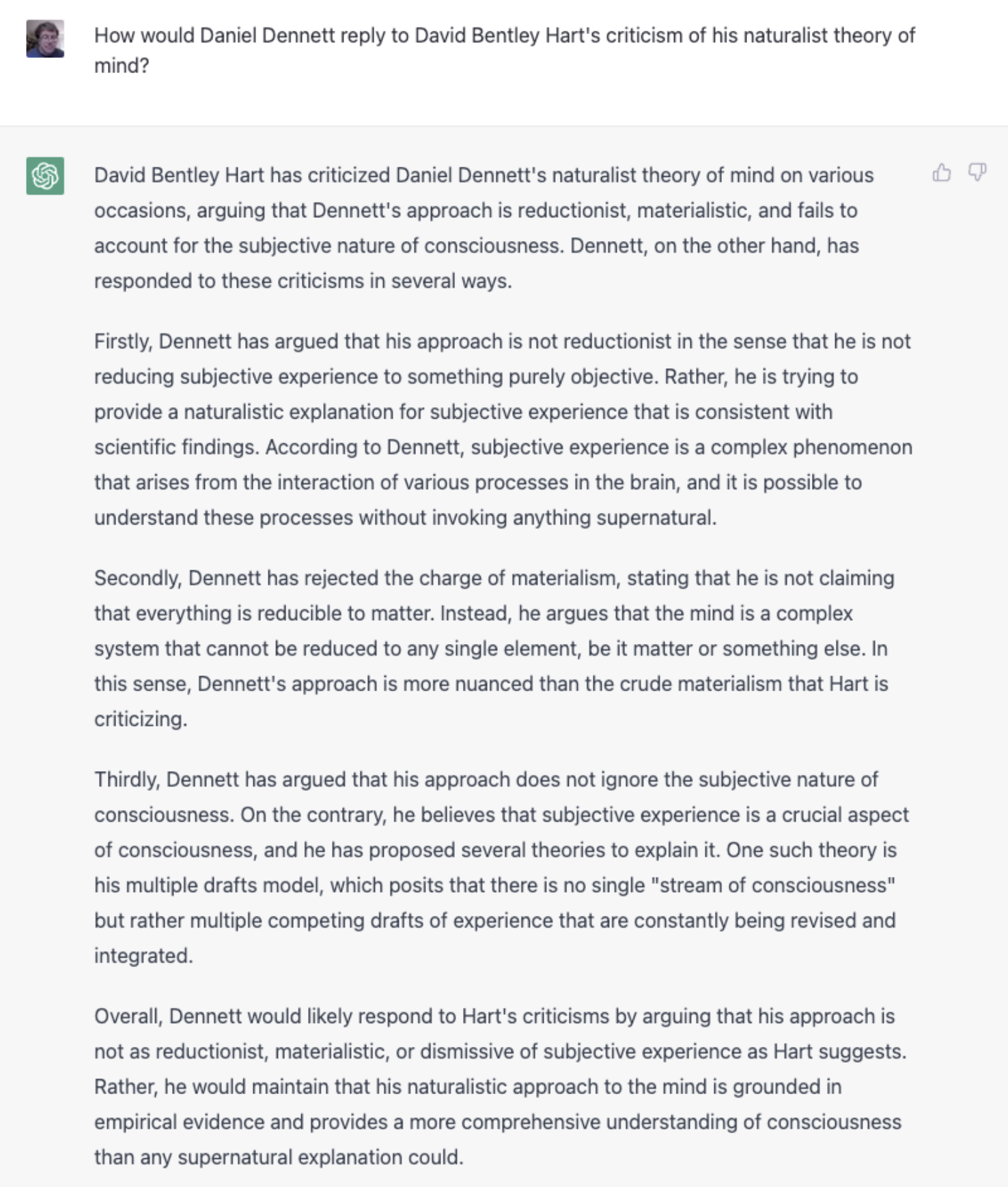

- Saying "we are not software" is dumb and useless, unless you have some better model of what we are. He doesn't have one, since he basically accepts the standard religious model of the soul. He's a theist at war with atheistic materialism, but theism tells you nothing about how the mind works.

Tone

- Man, did I dislike this. Not so much for its object-level content – he is opposed to all mechanical or naturalistic models of the mind – which, OK, none of them are without problems, and if you want to reject them utterly in favor of something non-mechanical and non-decomposable (a soul, basically), well, in truth you do have 2000 years of western thought and everyday experience on your side.

- No, it's something about his tone. He really hates not just materialism, but materialists themselves. Dennett and Dawkins come in for particular rancor (he thinks Chalmers is OK, barely). He thinks their doctrines are obviously absurd, and thus by implication anyone who advocates them must be either stupid or evil.

- Now, there's been plenty of contempt in the other direction. That's what the whole new atheist movement was about: ridiculing theists like Hart. So if he reciprocates the feelings, it's understandable. The Soul Gained and Lost by Phil Agre describes exactly how technical communities exclude humanists and their concerns.

- But I find that attitude tedious and unproductive. A good philosopher, I would think, is capable of modelling the views of his opponents – understanding how they think, which you kind of have to do if you are going to identify where they go wrong. But Hart literally cannot imagine what it is like to hold a materialist viewpoint, and so he misunderstands what it is and how it works (he doesn't really understand the dynamics of evolution or biological function), and can only mock its holders. He's combative, but not in an interesting way.

Idealism

- There was a line around 19:45:

function is more important perhaps than uh what's going on behind the functions as the as the algorithm becomes more and more sufficient there's never going to be a conscious agency there you know unless the computer is possessed by a demon or something ...

- Well, OK, I'm not sure how serious he was, but taken at face value this means he's more ready to grant reality to a demon possessing a machine than to the machine itself having agency. Which is perfectly consistent with the dualist position, I guess. Meat Machines can't think either, unless they are ensouled.

- Around 42:00:

the mystery of mind is purely modern philosophical question; there's no mystery of Mind in in antique philosophy East or West; there's a mystery perhaps of matter there's a mystery of the relationship of of or what bodies are; consciousness mind, that's the fundamental reality.

- OK, yes, he's an idealist, not a materialist. And for him the mind is not a mystery, but a given. It's the body, and the embodiment of mind, that is mysterious, although why it should be offensive to theorize about that, I don't know (that is what Dennett and the cogsci he channels are doing, basically).

- He's kind of right about syntax and semantics, but not about what it implies. Computers don't have "a unified field of experiences" like we do... well, we don't either, we just think we do.

- One area where I did partly agree with him – there's a deep relationship between all this metaphysics and politics. But he's wrong about the implications, or perhaps we are just not quite on the same team. He identifies computation with capitalism and bourgie rationality, which is fair enough, but his own metaphysics is squarely aristocratic, the mind/body split echoing the aristo/worker split. I'm on the team that wants to overthrow that oppressive split, which probably requires a separate essay.

- For more critique, see Marvin Minsky/on Philosophers

Ignorance

- As for software, I can safely say I know more about what it can or can't do than a philosopher, having worked with it for almost half a century now. The tl;dr for that is that software by definition can do anything we can specify precisely; that is what a computational process is, pretty much. As to what we can specify, that is growing as our tools for expressing programs get better. The recent spurt in AI capabilities does not directly prove Hart wrong (the AIs aren't really very intelligent or agent-like), but it does prove that we don't yet know the limits of what computation can do.

Caveat

- AI has a long history of ignoring its philosophical critics, thinking that technical skill trumps their tired and ancient abstractions. This is sometimes valid, but two of the classic philosophical critics, Hubert Dreyfus and John Searle, actually were worth paying attention to, it turns out. The same might be true of Hart, although I can't quite see it.

What do I think

- Mind/body dualism is the root of all philosophical confusion. Hart has the classical version of this disease; computation was supposed to gloriously overcome it but kind of got lost along the way. I don't have any great tricks for seeing past it, except to note that if you overemphasize one side to the neglect of the other, you are probably doing something wrong. Eliminative materialists and radical idealists are equally wrong in this way. See also dumbbell theory or the section on teleology in Weird Studies/Tarot/Wheel of Fortune.

- Software, or computation, cybernetics, cognitive science and information technology more generally, is a way of integrating the mental and the physical. It aims to transcend the gap between mind and body that has bedeviled western thought since its inception. A computer is precisely a process that is both physical and semantic – just like a human! Well, not quite "just", but it is closer to that than anything else. This makes software interesting and relevant for understanding the mind (although one should take AI claims with large doses of skepticism).

Elsewhere

- Somebody else unimpressed with Hart's aggressive style: The Fallacy of Unnatural Deceleration? (Crooked Timber)