AI Learning

18 May 2023 - 21 Nov 2025

- To read/watch

- Transformer papers

- Workshop on AI and the Barrier of Meaning

- Josh Tenenbaum

- Human is not the asymptote of scaling an ML process

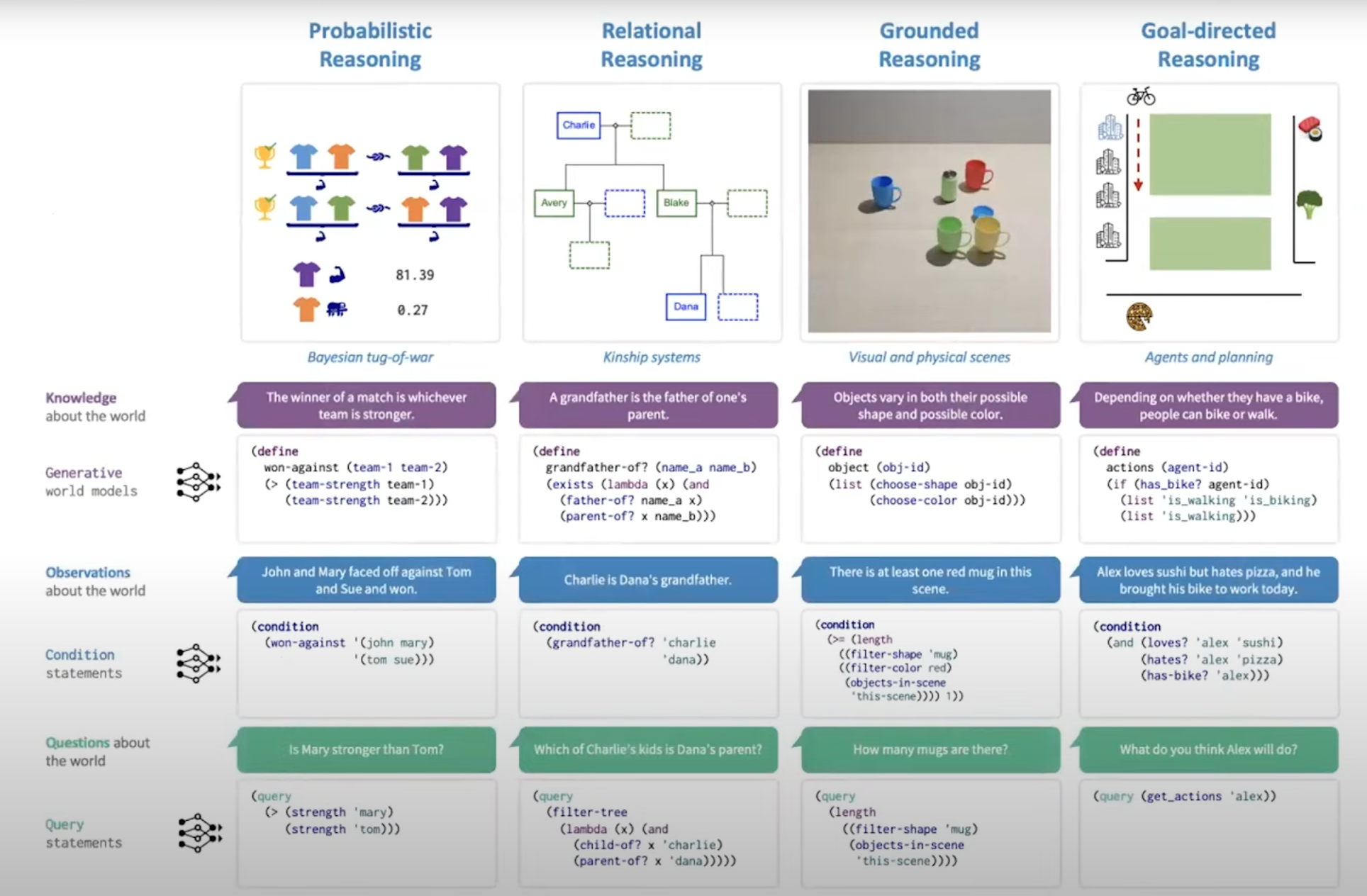

- Bayesian PP advocacy. Yay.

- Ref: Concepts in a Probabilistic Language of Thought, Goodman, Tenenbaum et al

- @The Child as Hacker

- later work integrating with LLMs?

- Anna Rogers, computational linguist

- bailed, seemed boring

- Blaise Agüera y Arcas

- maximum entropy, active inference hypothesis, both suggest that intelligence is in fact text token prediction

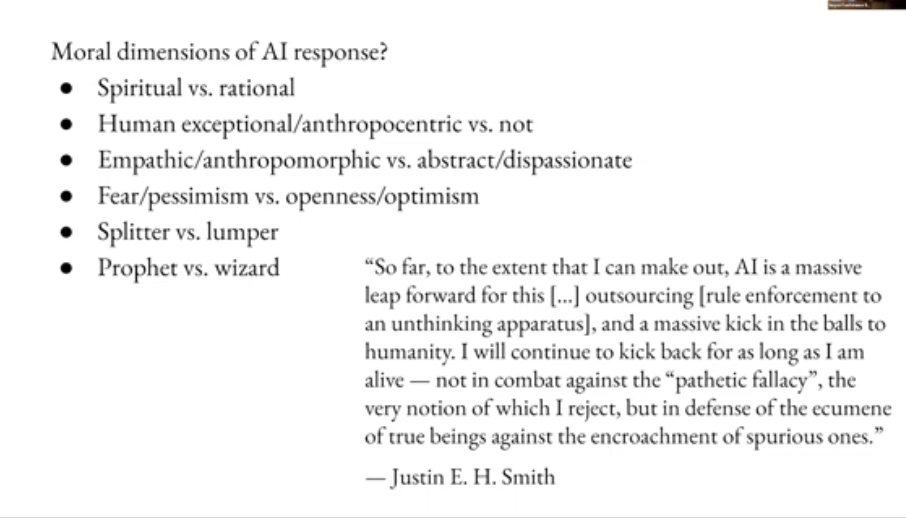

- JEHS is "a dear friend". Some digs at Gary Marcus. Back to McCulloch-Pitts

- Ref: An error limit for the evolution of language, Nowak, Krakauer et al

- Embodiment: he seems skeptical

- Ref: Looped Transformers as Programmable Computers, Giannou et al

- He thinks people are "pretty much sequence models" but acknowledges that's a rare opinion.

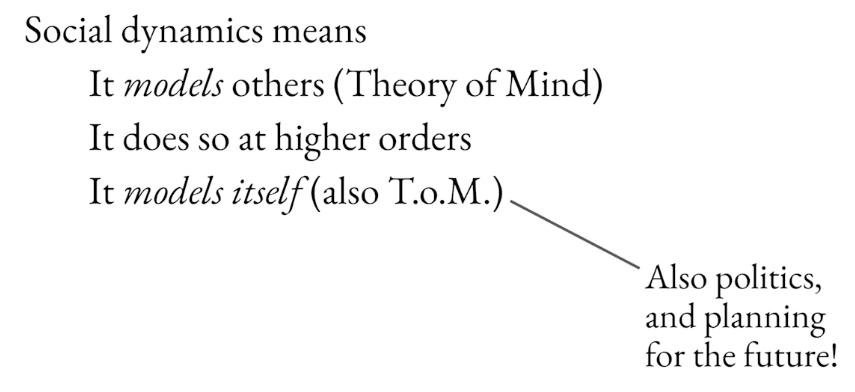

- Social dynamics means theory of mind, therefore modeling self (yes, this is obvious but rarely said). Robin Dunbar on higher-order theories of mind.

- Anna Ivanova, MIT, Language Understanding goes beyond language processing

- Tal Linzen, Language models could learn semantics (NYU, Google)

- boring

- Sparks of AGI: Early experiments with GPT-4 (Bubeck)

- Hofstadter paper that Josh Tenenbaum talked about (Is there an "I" in AI, April 2023) (can't find it)

- Building machines that learn and think like people (Lake, Tenenbaum)

- Embeddings - OpenAI API

- Embedding just means converting a text string into a point in a high-dimensional space (a vector): 1536 and 3072 dimensions for models "text-embedding-3-small" and "text-embedding-3-large" respectively

- Ah, but you can shorten the embeddings via an API parameter or after the fact with some lin alg.
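
A minimal sketch of the "after the fact" route, assuming the shortening is done by keeping the leading components and rescaling the result to unit length (the approach I understand the OpenAI docs to describe for the text-embedding-3 models). The function name `shorten_embedding` and the toy vector are my own, not part of any API.

```python
import math


def shorten_embedding(vec, dims):
    """Keep the first `dims` components of `vec` and rescale to unit length.

    Hypothetical helper for illustration; assumes truncation plus L2
    renormalization is an acceptable way to shorten the embedding.
    """
    if not 0 < dims <= len(vec):
        raise ValueError("dims must be between 1 and len(vec)")
    head = list(vec[:dims])
    norm = math.sqrt(sum(x * x for x in head))
    if norm == 0.0:
        # An all-zero prefix cannot be normalized; return it unchanged.
        return head
    return [x / norm for x in head]


# Toy 3-dimensional "embedding" shortened to 2 dimensions.
short = shorten_embedding([3.0, 4.0, 12.0], 2)
```

Renormalizing matters because cosine similarity and dot-product search generally assume unit-length vectors, and a truncated vector is no longer unit length.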

- Question answering using embeddings-based search | OpenAI Cookbook

- Interesting: they recommend preloading relevant documents into the normal query process, over fine-tuning.

- Different models have different context (token) limits

- And finding these docs via embedding-based search

- Note: PharmaKB semantic search is using this
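
A rough sketch of the search-then-ask pattern noted above: rank stored passages by cosine similarity to the query embedding, then paste the best ones into the prompt until a size budget runs out. Everything here is illustrative: the vectors are made-up 3-dimensional stand-ins for real embeddings, the helper names are hypothetical, and the budget is counted in characters as a crude proxy for a model's token limit.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query_vec, docs, k=2):
    """Return the k passage texts most similar to the query, best first.

    `docs` is a list of (text, vector) pairs.
    """
    ranked = sorted(
        docs,
        key=lambda d: cosine_similarity(query_vec, d[1]),
        reverse=True,
    )
    return [text for text, _ in ranked[:k]]


def build_prompt(question, passages, max_chars=2000):
    """Prepend passages to the question, stopping before the budget is exceeded."""
    header = "Use the passages below to answer the question.\n\n"
    footer = f"\nQuestion: {question}"
    body = ""
    for passage in passages:
        entry = f"- {passage}\n"
        if len(header) + len(body) + len(entry) + len(footer) > max_chars:
            break
        body += entry
    return header + body + footer


# Made-up passages and vectors; a real setup would get vectors from an embedding model.
docs = [
    ("Aspirin inhibits COX enzymes.", [0.9, 0.1, 0.0]),
    ("Paris is the capital of France.", [0.0, 0.2, 0.9]),
    ("Ibuprofen is an NSAID.", [0.8, 0.3, 0.1]),
]
query_vec = [1.0, 0.2, 0.0]  # stand-in for the embedded question

passages = top_k(query_vec, docs, k=2)
prompt = build_prompt("How does aspirin work?", passages)
```

The resulting `prompt` string is what would be sent to the chat model in place of the bare question. No fine-tuning is involved, which is the trade-off the cookbook note is pointing at.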

- Courses (want something like build an LLM):